As an artificial intelligence engineer, you’ll have had the opportunity to work across industries. Artificial intelligence and machine learning can be applied to a variety of data sources, including text, image and audio, in everything from fintech to experimental music compositions. Deep learning algorithms are becoming more powerful every day; for example, new generative models have the ability to create “pictures” of an environment via interaction and observation, even when the data is incomplete.

Certainly, in corporate and governmental contexts alike, leaders are curious about the possibilities machine learning algorithms present, especially in regard to incomplete data and unknown scenarios. However, this comes with some hesitancy. Although they’re intrigued, the obscurity of the technology leads to reticence, largely related to the price tag. Nevertheless, this isn’t to say it’s not an invaluable investment – if executed with the right support.

The body vs. the mind in AI projects

An artificial intelligence engineer will begin a project with data exploration. Data exploration is a complex process, where one has to understand the quality of the data, and subsequently, the complexity of the patterns the AI tool will be required to learn. Then, you need to assess the volume of data, build an algorithmic architecture, and train the model to achieve the desired performance. This creates the “brain” for the AI agent. Many people assume that this is the hard part.

Although we conceive of AI as primarily an artificial brain, the “body” of such an agent is just as important. This body will determine what can be learned from the environment and requires actuators that can modify that environment to create the learning conditions. It’s like how your eyes see what happens when you kick a ball towards a goal from different angles. When you see the results, you get better at scoring the goal.

Often, it’s the body rather than the brain that takes the most effort when building an AI tool. It’s what it means to really execute an AI-centred initiative, rather than adding a dash of ML to an existing project. As is probably evident, the complexity of these endeavours means the budget is less defined than with regular IT initiatives, and as we all know too well, the finance department tends to get the casting vote.

That said, there are ways to make AI projects more efficient, with specialised expertise being the primary tool. Instead of trawling through open-source libraries and applying standard algorithms – that is, the “existing project with a sprinkling of ML” approach – an experienced artificial intelligence engineer can build the body and mind you need to score the metaphorical goal. The initial investment is high, but you save months of development time. Time, after all, is money.

To spark your imagination, we’ll present the following case study, in which Outvise artificial intelligence engineer Jorge Davila Chacon worked with composer Alexander Schubert to create a machine learning tool for an experimental music composition. The results were fascinating, and they demonstrate the endless possibilities that generative models present.

A classical string ensemble, but not as you know it

The year 2020 was the 250th anniversary of the birth of Beethoven. To mark the occasion, contemporary art organisation PODIUM Esslingen commissioned a series of projects titled #BeBeethoven. One of these projects was by experimental composer Alexander Schubert and musicians Ensemble Resonanz. They initiated a collaboration with IRCAM, a French institute dedicated to research in the fields of avant-garde and electro-acoustical art music, and Jorge, artificial intelligence engineer, to build a generative AI model to create a never-before-seen music performance.

Alex’s vision was to create an AI-generated composition that explored the friction between machine perception and human perception, and their associated restrictions. In essence, to create a classical music performance perceived by a machine. This conceptual framework was an interesting starting point for Jorge as an artificial intelligence engineer, as within a creative process, it’s not possible to define the desired output. It was a challenge, but it meant experimentation was really possible.

Jorge was tasked with the creation of the visual element. While IRCAM engineers generated audio samples from models that learned from the voice of the ensemble of musicians, the tool Jorge created produced a fusion of avatars inspired by the images of the performers. More concretely, Jorge’s task was to design a neural architecture to generate images and a graphical user interface (GUI) to manipulate them semantically. However, in spite of the open-ended brief, even art projects have time constraints. Therefore, a fast iterative process was crucial to meet the deadline.

This process was particularly enlightening as the objective was to design an architecture that helped the composer to explore the generated images while reducing the training time by months. Jorge defined the requirements of each dataset based on the output of the previous model and repeated this cycle a few times. Once the output was satisfactory, Alexander composed the music and the videography.

Developing the brain

This project had some interesting constraints beyond the timeline. Primarily, the tool had to allow the reconstruction of a video input. These images had to be aesthetically interesting, as opposed to completely accurate, while keeping the resolution as high as possible. The training data and features weren’t fixed in advance, and importantly, the brief was to explore the latent space, create new interpolations and control the image features to create these aesthetically interesting results.

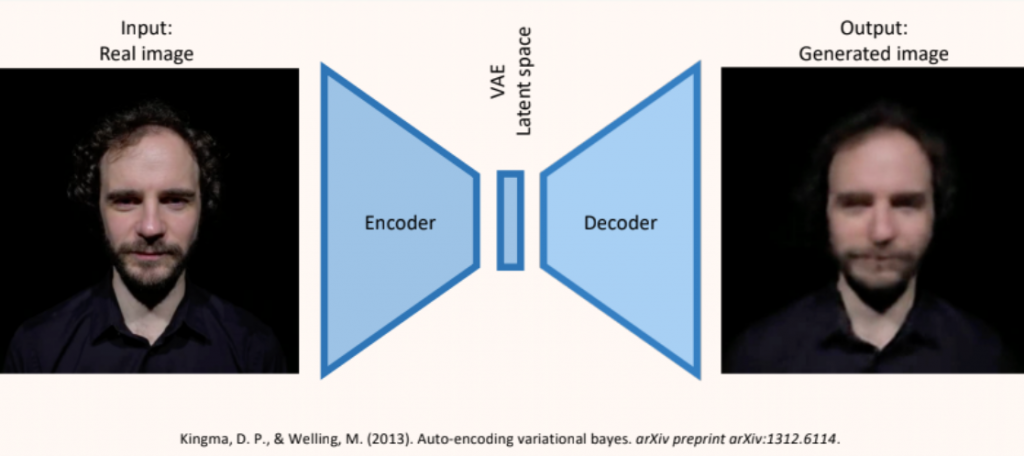

Considering these parameters, the first component of the tool was a Variational AutoEncoder (VAE), a type of neural network. A VAE processes data in three stages: the encoder, where the image is compressed into a compact code; the latent space, where that code lives; and the decoder, where the image is reconstructed from the code. A very reduced way of describing this is likening it to image compression. The program reads the file, takes it apart, and puts it back together again to create a smaller file. It’s worth noting here that a Variational AutoEncoder was selected over a traditional AutoEncoder as the latent space is smoother and more continuous, and crucially, can produce image transitions that are more natural.
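To make the "smoother latent space" point a little more concrete, here is a minimal sketch of the two ingredients that usually distinguish a VAE from a traditional autoencoder: sampling the latent code from a distribution, and penalising that distribution for drifting away from a standard normal. It is not the project's code; the function names and the example values are assumptions chosen purely for illustration, and a real model would compute `mu` and `log_var` with an encoder network.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps, with eps ~ N(0, 1), per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL divergence between N(mu, sigma^2) and N(0, 1), summed over dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))

# Hypothetical encoder output for one image: a mean and a log-variance
# for each of two latent dimensions.
rng = random.Random(0)
mu = [0.5, -1.0]
log_var = [0.0, -2.0]

z = reparameterize(mu, log_var, rng)       # the code handed to the decoder
penalty = kl_to_standard_normal(mu, log_var)
print(z, penalty)
```

In training, the KL term is typically added to the reconstruction error. It nudges the codes of different images towards one shared region of the latent space, which is one reason transitions between them tend to look natural.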

However, Jorge was trying to achieve something far more elaborate than merely compressing a video file. He was trying to get the AI to create unreal scenarios via the information that it was fed; that is, produce images that, in reality, were never filmed. To do this, he merged two algorithmic approaches so that the model had to learn the general image features once, and later, the user searched the specific image features that best represented the desired transformations (e.g. hairstyle, face expression, a type of arm movement, etc). In short, the machine had to learn meaningful signs in order to produce a proper representation at the decoding stage.

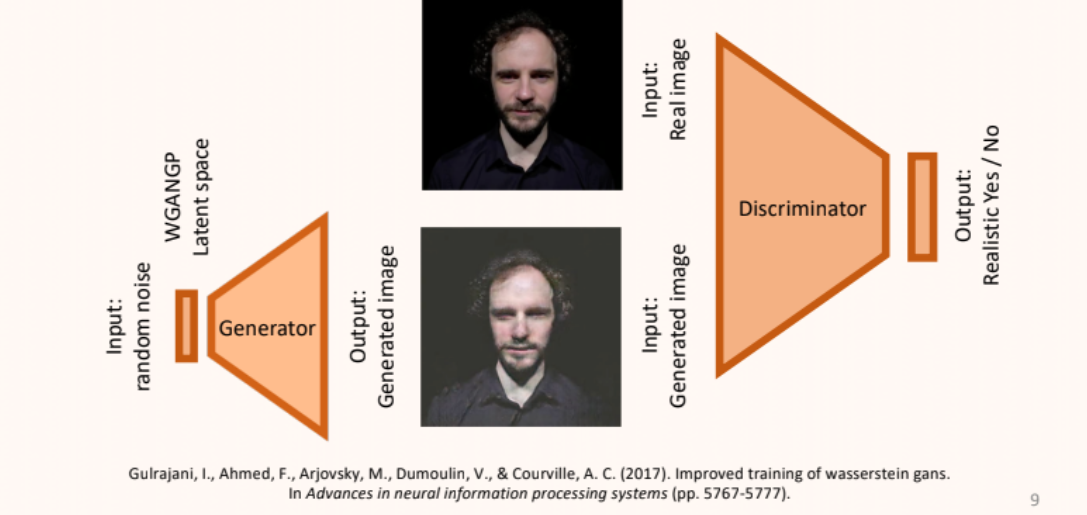

To do this, he implemented a Generative Adversarial Network (GAN), a machine learning approach built on competition. Essentially, two networks are trained against each other: one that tries to create the image and another that tries to spot the fakes. GANs are particularly useful given this project’s objectives, as they’re capable of producing very detailed pictures.

There are various GAN models that could have been chosen, with their own advantages and drawbacks:

- DCGANs (the first GAN using CNNs): Can capture more high-frequency components in the images, but are unstable and data-hungry.

- WGAN: Improves stability during learning (the algorithm converges more often to a good optimum).

- WGAN GP: Reduces the amount of data needed to train the network.

- Pix2Pix: Can produce interpolations and domain transfer, but is more data-hungry.

- CycleGANs: Perform style transfer without the need for lots of paired examples from both domains.

Based on this analysis, Jorge opted for a Wasserstein GAN with Gradient Penalty (WGAN GP). In short, the generator is trained to create increasingly realistic images from points in the latent space, while a second network (the critic) analyzes real and generated images and scores how real each one looks. Over time, telling them apart gets harder and harder for the critic as the generator continuously refines itself.
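As a rough illustration of what the "gradient penalty" adds, the sketch below computes a WGAN GP critic loss for a toy critic. It is a simplified stand-in, not the project's implementation: the critic is a plain Python function rather than a neural network, gradients are estimated by finite differences instead of automatic differentiation, and the helper names and the penalty weight of 10 are assumptions.

```python
import math
import random

def numerical_gradient(f, x, h=1e-5):
    """Central-difference estimate of the gradient of f at point x."""
    grad = []
    for i in range(len(x)):
        up = x[:i] + [x[i] + h] + x[i + 1:]
        down = x[:i] + [x[i] - h] + x[i + 1:]
        grad.append((f(up) - f(down)) / (2.0 * h))
    return grad

def wgan_gp_critic_loss(critic, real_batch, fake_batch, rng, lam=10.0):
    """Wasserstein critic loss plus gradient penalty (equal batch sizes assumed)."""
    n = len(real_batch)
    # The critic should score real samples high and generated samples low.
    wasserstein = (sum(critic(f) for f in fake_batch) / n
                   - sum(critic(r) for r in real_batch) / n)
    penalty = 0.0
    for real, fake in zip(real_batch, fake_batch):
        # Evaluate the gradient at a random point between a real and a fake sample.
        eps = rng.random()
        mixed = [eps * r + (1.0 - eps) * f for r, f in zip(real, fake)]
        grad = numerical_gradient(critic, mixed)
        grad_norm = math.sqrt(sum(g * g for g in grad))
        # Penalise gradient norms that move away from 1.
        penalty += (grad_norm - 1.0) ** 2
    return wasserstein + lam * penalty / n

# Toy critic (hypothetical): a linear score over two "pixel" values.
def toy_critic(x):
    return 3.0 * x[0] + 4.0 * x[1]

real = [[1.0, 0.0], [0.0, 1.0]]
fake = [[0.0, 0.0], [0.0, 0.0]]
print(wgan_gp_critic_loss(toy_critic, real, fake, random.Random(0)))
```

The penalty term keeps the critic's scores from changing too abruptly between nearby images, which is the mechanism generally credited for the more stable training that WGAN variants offer.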

This tool was paired with the VAE to create a custom architecture that could find new combinations and interpolations to create unseen results. Through three different datasets (facial expressions, violin players and cello players) the architecture could create avatars. This is what constituted the brain of the AI project.

Building the body

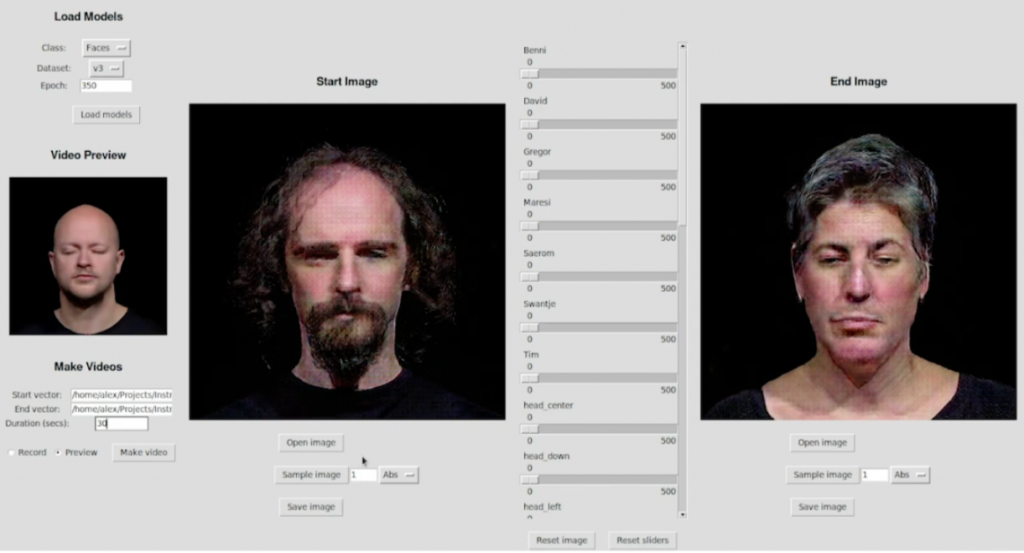

The next task was the body. Jorge needed to create a user-friendly GUI for Alex so that he could manipulate the parameters within the latent space in order to create aesthetically interesting images. This, in many ways, is where an artificial intelligence engineer really comes into their own. By creating a “body” that enables the AI brain to realise an objective, they can actualise the role of artificial intelligence in software. In this case, the objective was an aesthetically interesting visual effect to accompany the music.

To do this, Jorge created a platform where Alex could manipulate the parameters in the latent space using a series of sliders. These parameters relate to the raw data, including footage of the different musicians, their facial expressions and positions, and so on. Using these sliders, it’s possible to splice images, creating an unreal avatar from the data. Thus, the platform gave Alex the control to create an aesthetically interesting visual element to go with his composition, while maintaining the conceptual basis of the project.
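One plausible way to picture what such sliders do is as arithmetic on latent vectors: each slider moves the current point along a direction associated with a visual feature, and blending two points produces an in-between image once decoded. The sketch below assumes exactly that. The feature names, direction vectors and functions are hypothetical and are not taken from the actual platform.

```python
def apply_sliders(base_latent, directions, slider_values):
    """Shift a latent vector along named feature directions, scaled by each slider."""
    z = list(base_latent)
    for name, amount in slider_values.items():
        direction = directions[name]
        z = [zi + amount * di for zi, di in zip(z, direction)]
    return z

def interpolate(z_a, z_b, t):
    """Linear blend of two latent vectors; t=0 gives z_a and t=1 gives z_b."""
    return [(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)]

# Hypothetical three-dimensional latent space with two feature directions.
directions = {
    "expression": [1.0, 0.0, 0.0],
    "arm_position": [0.0, 0.5, 0.5],
}
violinist = [0.2, -0.4, 0.9]
cellist = [-0.6, 0.8, 0.1]

# Blend the two performers halfway, then nudge the result with the sliders.
avatar = interpolate(violinist, cellist, 0.5)
avatar = apply_sliders(avatar, directions, {"expression": 0.3, "arm_position": -1.0})
print(avatar)  # this vector would then be passed to the decoder to render an image
```

Keeping the sliders as simple offsets in the latent space means the model only has to be trained once, while the composer can keep exploring combinations interactively.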

The results were certainly remarkable. The composition is eerie and unreal, allowing the viewer to step into the world of classical music as it’s ‘perceived’ by a machine. The work was received with great critical acclaim, winning the prestigious Golden Nica at the Prix Ars Electronica 2021. Alex and the team were honoured to have been invited to participate in this project, and it was a fantastic opportunity to test the limits of the technology and our expertise.

Find an artificial intelligence engineer

What this project illustrates is the value of a bespoke tool created by an artificial intelligence engineer. The results, and perhaps most crucially the “body” interface that was developed, couldn’t have been produced within the timeline without the customised architecture. Therefore, it’s well worth the initial investment. In a business context, this efficiency and efficacy would be the foundations of a strong business case. Instead of spending time developing something in-house that ultimately isn’t fit for purpose, you get something that works.

But where can you find an artificial intelligence engineer to make your ideas become a reality? Surely, in such a niche field, these experts are difficult to come across? The answer is Outvise – and it’s for this reason that specialised platforms like it are so important. With a certified network of experts, companies can connect with an artificial intelligence specialist to create a custom project in less than 48 hours.

No matter your idea, location, industry or scale, there is an AI tool waiting to be unlocked. Deploying AI is the next major step of digital transformation, and those that aren’t exploring its potential will be those that are left behind. Explore the Outvise portfolio and start consulting with an expert today. As Alex’s project demonstrates, a little imagination (and a lot of expertise) goes a long way.

Dr. Jorge Davila-Chacon has ample experience in data science projects involving text, audio and visual data. He has designed Reinforcement Learning models that learn by direct customer interaction and generative models for the translation of image domains. Recently, he collaborated on the project Convergence, which won the top prize for 2021 at the Prix Ars Electronica, the world’s most time-honored media arts competition. Jorge is a lecturer at the Hamburg School of Business Administration (HSBA) and is co-founder of Heldenkombinat Technologies GmbH, where he designs and implements AI solutions for the industry. Jorge has participated in robotics and neuroscience programs with the Massachusetts Institute of Technology (MIT), Tsinghua University (Beijing), the Stem Cell and Brain Research Institute (CNRS, France), the Speech, Music and Hearing Institute (KTH, Sweden) and the University of Hyderabad. He is an invited reviewer for the Journal of Computer Speech and Language and the journal Robotics, and has been an organizing committee chair for ICANN, the European flagship conference for artificial neural networks.
